After the appearance of AJAX, today usually implemented with the Fetch API, SPA (Single Page Application) frameworks emerged. Through Fetch requests, they generate part of the HTML on the client, which prevents spiders from correctly indexing all the content and, above all, from crawling it. In addition, this type of website can be implemented without crawlable links, either by using URLs with fragments (the # character) or by not using URLs in the links at all (so the links only work when a user clicks on them).


With the designation “SPA” comes the term Multiple Page Application (MPA), which refers to the classic frameworks that generate all the HTML on the server. That is what spiders need in order to index and crawl the pages without problems.

There are many SPA frameworks: from Google we have Angular (formerly called AngularJS); from Facebook, React; and there is a host of other open-source projects such as Vue, Meteor, Loop, Render, Ember, Aurelia, Backbone, Knockout, Mercury, etc. Initially, these frameworks could only run on the client, but we will see later that the best solution is for them to run on the server as well.

How does a SPA framework work without Universal JavaScript?

As I have already mentioned, SPA frameworks are based on the Fetch API. They work by loading into the browser a shell that contains the parts that do not change during navigation, together with a series of HTML templates that are filled with the responses to the Fetch requests sent to the server. We need to distinguish between what happens on the first request to the website and what happens during navigation through links once it has loaded, since the operation is different:

- First request: The shell is sent over the network. Then, with one or several Fetch requests (which also travel over the network), the data is obtained to generate the HTML of the page’s main content. Consequently, the first load is slower because of the additional round trips over the network, which is usually what takes the longest. Therefore, on a first load, it is faster to send all the HTML generated on the server in a single response, as MPA frameworks do.

- Loading the following pages after the first request: in SPA frameworks, this load is much smoother and faster since the entire HTML does not have to be generated. In addition, you can add transitions or loading bars between one page and the next, which gives a feeling of greater speed. However, there is a significant added problem: because part of the HTML is generated on the client with JavaScript after a Fetch request, spiders cannot index these pages, especially when the user can reach pages for which the spider does not even have a URL to index.
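The client-only flow described above can be sketched in TypeScript as follows. This is only an illustration under stated assumptions: the `Article` shape, the shell markup, the template, and the `fetchJson` parameter (a stand-in for a real Fetch call) are hypothetical and not taken from any particular framework.

```typescript
// Hypothetical shape of the JSON returned by a Fetch request for one page.
interface Article {
  title: string;
  body: string;
}

// The shell: sent on the first request, it holds the parts that never change.
// Its main content area stays empty until JavaScript fills it in.
const shell = '<header>My site</header><main id="app"></main>';

// Escapes text so it can be placed safely inside HTML.
function escapeHtml(text: string): string {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// An HTML template that is filled with the response to a Fetch request.
function articleTemplate(article: Article): string {
  return `<h1>${escapeHtml(article.title)}</h1><p>${escapeHtml(article.body)}</p>`;
}

// Places the generated content inside the shell's empty <main> element.
function fillShell(shellHtml: string, content: string): string {
  return shellHtml.replace('<main id="app"></main>', () => `<main id="app">${content}</main>`);
}

// fetchJson is a stand-in for `fetch(url).then((r) => r.json())` in a browser.
async function renderPage(
  url: string,
  fetchJson: (url: string) => Promise<Article>,
): Promise<string> {
  const article = await fetchJson(url); // an extra trip over the network
  return fillShell(shell, articleTemplate(article));
}
```

Note that a spider that downloads only `shell` and does not run the JavaScript sees an empty `<main>` element, which is the indexing problem this section describes.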

How did people try to make a SPA framework indexable before Universal JavaScript?

Initially, the idea arose of using a tool on the server that acts as a browser. This server-side browser would go into action when a spider makes a request. It would work as follows: first, we detect that the request comes from a spider by filtering on its user agent (for example, “Googlebot”), and then we pass it to a kind of browser within the server itself. This browser, in turn, requests that URL from the web service on the same server. Then, having received the web service’s response, it executes the JavaScript, which makes the Fetch requests and generates the entire HTML, so that we can return it to the spider and cache it. Thus, the spider’s next request for that URL is served from the cache and is therefore faster.
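A minimal sketch of that request flow is shown below, assuming a hypothetical `prerender` function that represents the server-side browser (it is injected, so no real tool or library API is implied). The list of user agents in `BOT_PATTERN` and the in-memory `Map` used as a cache are also assumptions made for illustration.

```typescript
// Hypothetical: loads a URL in a server-side browser, runs its JavaScript,
// and resolves with the complete generated HTML.
type Prerender = (url: string) => Promise<string>;

// Assumed list of spiders to filter by user agent.
const BOT_PATTERN = /googlebot|bingbot/i;

function isSpider(userAgent: string): boolean {
  return BOT_PATTERN.test(userAgent);
}

// Generated HTML, keyed by URL.
const htmlCache = new Map<string, string>();

async function handleRequest(
  url: string,
  userAgent: string,
  prerender: Prerender,
  shell: string,
): Promise<string> {
  if (!isSpider(userAgent)) {
    return shell; // users get the shell and generate the HTML in their browser
  }
  const cached = htmlCache.get(url);
  if (cached !== undefined) {
    return cached; // later spider requests are served from the cache
  }
  const html = await prerender(url); // slow path: executes the page's JavaScript
  htmlCache.set(url, html);
  return html;
}
```

The branch on `isSpider` is exactly where users and spiders start receiving different responses.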

To do this well, the links that launch Fetch requests must have friendly URLs (old techniques such as hashbang “#!” URLs must not be used), and when the user clicks on a link, the developer must write that same URL into the address bar with JavaScript, using the History API. This way, we ensure that the user can share that URL and save it in favorites. This URL must return the entire page when the spider requests it from the server.
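As a rough sketch of the link handling just described: the `HistoryLike` interface below is a minimal stand-in for the part of the browser’s History API that is used (`window.history.pushState`), and `isFriendlyUrl`, `navigate`, and `loadContent` are hypothetical names introduced only for this example.

```typescript
// Minimal shape of the History API used here; window.history satisfies it.
interface HistoryLike {
  pushState(data: unknown, unused: string, url: string): void;
}

// The part of a URL after "#" is never sent to the server, so a hashbang
// URL such as "/#!/products/42" cannot return a full page to a spider.
function isFriendlyUrl(href: string): boolean {
  return href.startsWith("/") && !href.includes("#");
}

async function navigate(
  href: string,
  history: HistoryLike,
  loadContent: (url: string) => Promise<void>, // hypothetical: Fetch + render
): Promise<boolean> {
  if (!isFriendlyUrl(href)) {
    return false; // not handled here; leave it to the browser
  }
  await loadContent(href);
  history.pushState({}, "", href); // show the same URL in the address bar
  return true;
}

// In a browser, a click handler on links would call event.preventDefault()
// and then navigate(href, window.history, loadContent).
```

Because the URL written with `pushState` is a normal path, the server can answer a direct request for it with the entire page.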

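Rendering pages for spiders with a browser on the server is not a good technique because it presents the following problems:

- We are doing cloaking (serving spiders something different from what users receive), and our website will only be indexed by the spiders that we have filtered for.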

- If we do not have the HTML cached, the spider will notice the prolonged loading time.

- If we want the spider to perceive a faster loading time, we will have to generate a cache with the HTML of all the URLs, which implies having a cache invalidation policy. This may be unfeasible for the following reasons:

  - The information must be continuously updated.

  - The time needed to generate the entire cache is unaffordable.

  - We do not have space on the server to store all the pages in the cache.

  - We do not have the processing capacity to generate the cache and keep the site online at the same time.

  Remember that cache invalidation is a very complex problem, since the cache must be updated whenever something changes in the database. However, it is not easy to erase from the cache exactly the data that has been updated: because the cache is not a database but something simpler and faster, we cannot easily select what we want to regenerate, so the strategies followed either erase more than necessary or leave inconsistent data. Depending on the case, these problems may rule out this solution.

- Finally, the tools that act as a browser on the server are often paid.
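The cache invalidation difficulty mentioned in the list above can be illustrated with a small sketch. The `HtmlCache` class and its prefix-based strategy are assumptions chosen for illustration, not a recommended design: the cache stores finished HTML by URL and has no record of which database rows each page was built from.

```typescript
// Generated HTML keyed by URL. The cache does not know which data each page used.
class HtmlCache {
  private pages = new Map<string, string>();

  set(url: string, html: string): void {
    this.pages.set(url, html);
  }

  get(url: string): string | undefined {
    return this.pages.get(url);
  }

  // Coarse strategy: drop every cached page under a URL prefix.
  // Returns how many pages were erased.
  invalidatePrefix(prefix: string): number {
    let removed = 0;
    for (const url of Array.from(this.pages.keys())) {
      if (url.startsWith(prefix)) {
        this.pages.delete(url);
        removed++;
      }
    }
    return removed;
  }
}

// Product 1 is renamed in the database.
const cache = new HtmlCache();
cache.set("/", '<a href="/products/1">Old name</a>');
cache.set("/products/1", "<h1>Old name</h1>");
cache.set("/products/2", "<h1>Other product</h1>");

// Erases more than necessary: product 2 did not change but is dropped too.
const erased = cache.invalidatePrefix("/products/");

// Leaves inconsistent data: the home page still shows the old name.
const staleHome = cache.get("/");
```

In this sketch a single product change both discards an unaffected page and leaves a stale one behind, which are the two failure modes described above.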

How to make a SPA framework indexable with Universal JavaScript?

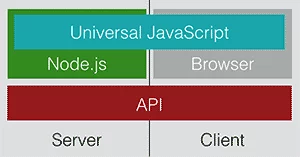

The idea of Universal JavaScript, or isomorphic JavaScript (as it was initially called), gained popularity with Facebook’s SPA framework (React). It consists of using a universal API that, underneath, uses client JavaScript APIs or server JavaScript APIs (with Node.js), depending on whether this universal API is executed on the client or on the server, respectively. In this way, code written in JavaScript against this API can run on both the client and the server. If we combine this with a SPA framework that was designed to work only on the client, we get a universal framework that can work on both the client and the server, as follows:

First of all, we must bear in mind that we can distinguish between three different types of JavaScript code in our web development, depending on where it is going to be executed:

- Only in the client.

- Only on the server, although this JavaScript could be replaced by any server language such as PHP.

- Both in the client and the server (Universal JavaScript).

If we use JavaScript in the code block that runs only on the server, we will be using this language for all three cases; therefore, it is said that we use a Full-Stack framework.
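The three kinds of code and the universal API that sits between them can be sketched as follows. All names here (`DataApi`, `createClientApi`, `createServerApi`, `isServer`, `loadTitle`, and the `/api/home` path) are hypothetical and chosen only to illustrate the idea; real universal frameworks expose their own APIs.

```typescript
// The universal API: the only interface that shared code is written against.
interface DataApi {
  getJson(path: string): Promise<unknown>;
}

// Client only: would use the browser's Fetch API over the network.
// fetchJson is a stand-in for `fetch(url).then((r) => r.json())`.
function createClientApi(fetchJson: (url: string) => Promise<unknown>): DataApi {
  return { getJson: (path) => fetchJson(path) };
}

// Server only: answers from the server's own handlers, with no network trip.
function createServerApi(handlers: Map<string, () => unknown>): DataApi {
  return {
    async getJson(path) {
      const handler = handlers.get(path);
      if (!handler) {
        throw new Error(`No handler for ${path}`);
      }
      return handler();
    },
  };
}

// One common way to tell the environments apart: browsers define `window`.
function isServer(): boolean {
  return typeof (globalThis as unknown as Record<string, unknown>)["window"] === "undefined";
}

// Universal: this function runs unchanged on the client and on the server,
// because it only depends on the DataApi interface.
async function loadTitle(api: DataApi): Promise<string> {
  const data = (await api.getJson("/api/home")) as { title: string };
  return data.title;
}
```

An application would pick `createServerApi` or `createClientApi` once at startup, for example based on `isServer()`, and pass the result to universal code such as `loadTitle`.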

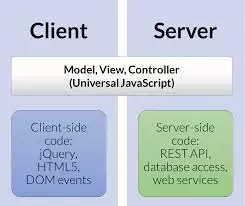

Just like when we did not have Universal JavaScript, the behavior will be different for the first request and the following ones:

- First request: Regardless of whether the request comes from a spider or a user, the entire HTML is generated on the server by the Universal JavaScript block, which makes Fetch requests to the JavaScript that runs only on the server. The operation is similar to when Universal JavaScript is not used, with the difference that the Fetch requests are made from the server to itself and not from the client, which saves the initial round trips over the network.

- Loading the following pages after the first request: If a user clicks on a link, the JavaScript that runs only on the client intercepts the click and passes the request to the Universal JavaScript (the same code as in the previous point). This makes a Fetch request, with the requested URL, to the JavaScript that runs only on the server and, once the data has been retrieved, shows the new page to the user. In this case, the Fetch request goes from the client to the server, which avoids reloading the entire page.

This way, we have pages that load fast both on the first request and during subsequent navigation, and spiders have no problems indexing them, because the entire page is always generated on the server for them, without cloaking.
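The two cases can be sketched as one shared rendering function used from two entry points. This is a simplified illustration, not how any specific framework implements it: `Page`, `LoadPage`, `renderContent`, `handleFirstRequest`, `handleLinkClick`, and `setMainHtml` are hypothetical names, and HTML escaping is omitted to keep the sketch short.

```typescript
interface Page {
  title: string;
  body: string;
}

// Hypothetical data loader. On the server it asks the server itself;
// on the client it would make a Fetch request over the network.
type LoadPage = (url: string) => Promise<Page>;

// Universal: the same rendering code runs on the server and on the client.
function renderContent(page: Page): string {
  return `<h1>${page.title}</h1><p>${page.body}</p>`;
}

// Server only, first request: users and spiders receive the same full HTML.
async function handleFirstRequest(url: string, loadPage: LoadPage): Promise<string> {
  const page = await loadPage(url);
  return `<!DOCTYPE html><html><body><main id="app">${renderContent(page)}</main></body></html>`;
}

// Client only, later navigation: fetch the data and replace the main content
// without reloading the entire page. setMainHtml stands in for a DOM update.
async function handleLinkClick(
  url: string,
  loadPage: LoadPage,
  setMainHtml: (html: string) => void,
): Promise<void> {
  const page = await loadPage(url);
  setMainHtml(renderContent(page));
}
```

Since both paths go through `renderContent`, the HTML a spider receives on a first request matches what a user sees after navigating to the same URL.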

Conclusion

If a development company offers you a website built with Angular, React, or another SPA framework, make sure that they know Universal JavaScript and that they have a project that is being indexed correctly. They may not be aware of its existence or may not know how to use it, and it is not uncommon for them to use an old version of the framework that does not support Universal JavaScript. In Angular, for example, it initially appeared as an independent add-on called Angular Universal, and it was later incorporated into the framework. If, on the other hand, they do know it, there will be no problem with the site’s indexability.

Another matter is whether they know JavaScript, the frameworks, and the problems of this type of website well enough to make the code maintainable and the errors easy to test for and fix. A good indication that they know what they are facing is whether they use other libraries alongside those already mentioned to manage the application state, such as Redux or NgRx, since without this kind of additional tooling this task can result in code that is hard to maintain.