Have you worked hard to build your site and cannot wait to see it at the top of Google, but despite all your efforts it cannot even get onto the first 10 pages? If you’re sure that your site deserves a better ranking, the problem may be its “crawlability”.

What is “crawlability”? Search engines use search bots to collect certain information about web pages. The process of collecting this information is called “crawling.” Based on this information, the search engines include the pages in their search index, which means that the pages can be found by users. The crawlability of a website is a term that describes how accessible the site is to search engine bots. You need to make sure that the search bots can find the pages of your site, gain access to them, and read them.

Let’s break down possible crawlability issues into two categories – those that can be solved by any user and those that require the attention of a developer or system administrator. Of course, everyone has different training, skills, and knowledge, so this categorization is approximate.

Speaking of “problems that any user can solve,” we refer to problems that can be solved by accessing the page code or the root files. Basic programming skills (for example, to change or replace a piece of code in the right place and in the right way) may be necessary to solve this type of situation.

The problems that are best solved with the help of a specialist, on the other hand, are those that require knowledge of server administration and/or web development.

Crawler blocked by meta tags or robots.txt

This type of problem is the easiest to detect and resolve by checking your meta tags and the robots.txt file, so we recommend you start the analysis of possible issues here. The entire site or a few pages remain out of Google’s reach simply because search bots were banned from entering.

There are some directives that prevent bots from crawling pages. But note that using these directives in the robots.txt file is not always an error. When used with care and knowledge, they point the bots in the right direction, making crawling easier and guiding them exclusively through the pages that you want crawled.

1. Blocking a page from indexing with a meta tag

If this directive is present, the search bot will not include the page in the index and will move on to the next one.

You can detect this case by checking whether your page’s code contains this directive:

<meta name="robots" content="noindex" />

2. “Nofollow” links

In this case, the search engine bot will index the content of the page, but will not follow the links. There are two types of “nofollow” directive:

A: For the entire page – check for <meta name="robots" content="nofollow"> in the page code. This means that the bot will not follow any links on that page.

B: For a single link – in this case, the code is: <a href="pagename.html" rel="nofollow">
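The noindex and nofollow checks described above can be automated. The following is a minimal sketch using only Python's standard library; the names `RobotsDirectiveParser` and `audit_page` and the sample HTML are illustrative assumptions, not part of any SEO tool.

```python
from html.parser import HTMLParser


class RobotsDirectiveParser(HTMLParser):
    """Collects page-level robots directives and links marked rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.page_directives = set()  # e.g. {"noindex", "nofollow"}
        self.nofollow_links = []      # href values of individual nofollow links

    def handle_starttag(self, tag, attrs):
        # The parser lowercases tag and attribute names; values are kept as-is.
        attr_map = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr_map.get("name", "").lower() == "robots":
            for part in attr_map.get("content", "").split(","):
                if part.strip():
                    self.page_directives.add(part.strip().lower())
        elif tag == "a" and "nofollow" in attr_map.get("rel", "").lower().split():
            self.nofollow_links.append(attr_map.get("href", ""))


def audit_page(html):
    """Return (page-level directives, hrefs of nofollow links) for one page."""
    parser = RobotsDirectiveParser()
    parser.feed(html)
    return parser.page_directives, parser.nofollow_links


# Hypothetical page used only for illustration.
sample = """
<html><head><meta name="robots" content="noindex" /></head>
<body>
  <a href="pagename.html" rel="nofollow">Blocked link</a>
  <a href="other.html">Normal link</a>
</body></html>
"""
directives, links = audit_page(sample)
print(directives)  # {'noindex'}
print(links)       # ['pagename.html']
```

Running this over the HTML of your key pages gives a quick list of pages and links that bots are being told to skip.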

3. Blocking pages from indexing with robots.txt

Robots.txt is the first file on your site that gets visited by the search bot. The worst you can find in this file is:

User-agent: *
Disallow: /

This means that all pages on the site are blocked from indexing.

It may happen that only a few pages or sections are blocked, for example:

User-agent: *
Disallow: /products/

In this case, every page in the /products/ section is blocked from indexing, and none of the descriptions of your products will be visible to Google.
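To see how a bot would interpret rules like the two examples above, you can feed them to Python's standard `urllib.robotparser` module. This is a small sketch; the helper name `is_allowed` and the example.com URLs are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


block_all = "User-agent: *\nDisallow: /"
block_products = "User-agent: *\nDisallow: /products/"

# The whole site is closed to every bot.
print(is_allowed(block_all, "https://example.com/about.html"))                # False
# Only the /products/ section is closed.
print(is_allowed(block_products, "https://example.com/products/shoes.html"))  # False
print(is_allowed(block_products, "https://example.com/about.html"))           # True
```

Note that the standard-library parser uses simple prefix matching, so it may not treat wildcard patterns the same way a particular search engine does.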

Problems caused by broken links

Broken links mean a bad experience for your users as well as for search bots. Each page that the bot indexes (or tries to index) uses up part of the crawl budget. That is, if you have many broken links, the search bot will waste a lot of time trying to index them and will not have time left for your quality pages. The Crawl Errors report in Google Search Console can help you identify this type of problem.

4. URL Errors

URL errors are usually caused by a typo that happens when you insert the link on your page. Check that all links are spelled correctly.

5. Outdated URLs

If you’ve recently been through a site migration, cleanup, or URL structure change, you need to carefully check this issue. Make sure that there is no link that leads to a page that does not exist or belongs to the old structure.

6. Pages with Access Denied

If you notice that many pages return, for example, a 403 status code, it is possible that these pages are only available to registered users. Mark the links to them with the “nofollow” attribute so that the bot does not waste crawl budget on them.

Broken links caused by server problems

7. Server Errors

Most errors with 5xx code (for example, 502) can be a sign of problems on the server. To resolve them, show the list of pages with errors to the person responsible for developing and maintaining the website.
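Once you have a list of URLs and the status codes they returned, it helps to sort them by who needs to act on them. The sketch below groups results into the categories discussed in the sections above; the category labels, function names, and sample data are illustrative assumptions.

```python
from collections import defaultdict


def classify_status(status_code):
    """Map an HTTP status code to one of the problem types discussed above."""
    if status_code == 403:
        return "access denied"
    if status_code in (404, 410):
        return "broken link"
    if 500 <= status_code <= 599:
        return "server error"
    if 400 <= status_code <= 499:
        return "other client error"
    if 300 <= status_code <= 399:
        return "redirect"
    return "ok"


def group_by_problem(crawl_results):
    """Group URLs by problem type, leaving out pages that responded normally."""
    groups = defaultdict(list)
    for url, status in crawl_results.items():
        category = classify_status(status)
        if category != "ok":
            groups[category].append(url)
    return dict(groups)


# Hypothetical crawl output: URL -> status code.
results = {
    "/": 200,
    "/old-page": 404,
    "/members": 403,
    "/checkout": 502,
}
print(group_by_problem(results))
```

The "server error" group is the list to hand over to whoever maintains the server, while the "broken link" group can usually be fixed in the page content itself.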

8. Limited server capacity

When your server is overloaded, it may stop responding to requests from users and bots. When this happens, your visitors receive a “Connection timed out” message. This issue should be resolved together with the specialist who maintains the site, who can estimate whether and by how much the server’s capacity should be increased.

9. Server Configuration Errors

This problem can be tricky. The site may be perfectly visible to users and at the same time return an error to the search bot, making all pages unavailable for indexing. This happens because of specific server settings: some firewalls (for example, Apache mod_security) block Googlebot and other bots by default. This problem can only be solved by a specialist.
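A rough way to spot this situation is to request the same URL once with a browser-like User-Agent and once with Googlebot's User-Agent string, then compare the status codes. The sketch below assumes the function names shown and a simplified browser string. It only imitates the User-Agent header, so rules based on IP address or reverse DNS will not be detected.

```python
import urllib.error
import urllib.request

BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def fetch_status(url, user_agent):
    """Return the HTTP status code the server sends for this User-Agent."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


def bot_is_blocked(url, fetch=fetch_status):
    """True if a browser-like request succeeds but a Googlebot-like one fails."""
    browser_status = fetch(url, BROWSER_UA)
    bot_status = fetch(url, GOOGLEBOT_UA)
    return browser_status < 400 and bot_status >= 400


# Offline demonstration with a stand-in fetcher (hypothetical responses).
def fake_fetch(url, user_agent):
    return 403 if "Googlebot" in user_agent else 200


print(bot_is_blocked("https://example.com/", fetch=fake_fetch))  # True
```

Calling `bot_is_blocked(url)` without the `fetch` argument performs real requests; a `True` result is a hint worth passing on to your server administrator rather than a definitive diagnosis.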

Sitemap errors

The sitemap, together with the robots.txt file, is responsible for the first impression your site makes on search bots. A correct sitemap tells bots how you would like the site to be indexed. Let’s see what can go wrong when a search engine bot starts crawling your sitemaps.

10. Formatting Errors

There are several types of formatting errors, for example, invalid URLs or missing tags.

You may also find that the sitemap file is blocked by the robots.txt file. This means that the bot cannot access the content of your sitemap.
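The two formatting errors mentioned above, invalid URLs and missing tags, can be checked with a short script. This is a minimal sketch using Python's standard XML parser; the function name `find_sitemap_problems` and the sample sitemap are assumptions, and the script covers only these two checks, not the full sitemap protocol.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace used by standard XML sitemaps.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def find_sitemap_problems(sitemap_xml):
    """Return a list of human-readable problems found in a sitemap string."""
    try:
        root = ET.fromstring(sitemap_xml)
    except ET.ParseError as error:
        return [f"not well-formed XML: {error}"]

    problems = []
    for index, url_element in enumerate(root.iter(f"{SITEMAP_NS}url"), start=1):
        loc = url_element.find(f"{SITEMAP_NS}loc")
        if loc is None or not (loc.text or "").strip():
            problems.append(f"entry {index}: missing <loc> tag")
            continue
        address = loc.text.strip()
        parsed = urlparse(address)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"entry {index}: invalid URL {address!r}")
    return problems


# Hypothetical sitemap with one valid entry and two faulty ones.
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>example.com/no-scheme</loc></url>
  <url><lastmod>2020-01-01</lastmod></url>
</urlset>"""

for problem in find_sitemap_problems(sample):
    print(problem)
```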

11. Wrong sitemap pages

Now let’s move on to the content. Even those who are not web development specialists can evaluate the relevance of the URLs in the sitemap. Make sure that each link in the sitemap is relevant, up to date, and correct (it does not contain typos). Since the crawl budget is limited and bots cannot get through the whole site, the sitemap points them to the most important pages.

Do not give the bot contradictory instructions: make sure that the URLs in your sitemap are not blocked from indexing by meta tags or robots.txt.
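One of these contradictions, a sitemap URL that robots.txt disallows, is easy to check automatically. The sketch below assumes you already have the sitemap URLs as a list and the robots.txt content as a string; the function name and sample data are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_sitemap_urls(sitemap_urls, robots_txt, user_agent="Googlebot"):
    """Return the sitemap URLs that robots.txt forbids user_agent to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in sitemap_urls if not parser.can_fetch(user_agent, url)]


robots_txt = "User-agent: *\nDisallow: /products/"
sitemap_urls = [
    "https://example.com/",
    "https://example.com/products/shoes.html",
]
print(blocked_sitemap_urls(sitemap_urls, robots_txt))
# ['https://example.com/products/shoes.html']
```

Any URL this returns is being recommended and forbidden at the same time, so either remove it from the sitemap or lift the block.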

Site architecture issues

The problems of this category are the most difficult to solve. Therefore we recommend checking the previous steps before investigating the next problems.

Website architecture issues can disorient bots or prevent them from entering your site.

12. Internal Link Problems

In a properly optimized website structure, all the pages form an unbroken chain of links, so that the search bot can easily reach each page.

In a non-optimized site, some pages tend to get out of sight of the bots. There are several reasons for this and all can be detected and categorized with the help of the SEMrush Site Audit tool.

- The page you’d like to see on the first few pages of Google is not linked from any other page on your site. In this case, the search bot cannot find and index it.

- Over 3,000 active links on a single page (this is a lot of work for the bot)

- Links are hidden in a site element that cannot be indexed (subscription forms, frames, plug-ins, primarily Java and Flash)

In most cases, the problem of internal linking is not something that can be quickly solved. A thorough analysis of the site structure will be required.
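As a starting point for that analysis, the first two problems in the list above can be found with a simple traversal of your internal link graph. The sketch below assumes the graph is available as a dictionary mapping each page to the pages it links to; the function name and sample graph are hypothetical, and the 3,000-link threshold is taken from the list above.

```python
from collections import deque


def audit_internal_links(link_graph, start_page, max_links=3000):
    """Find pages unreachable from start_page and pages with too many links.

    link_graph maps each known page to the list of pages it links to.
    """
    reachable = {start_page}
    queue = deque([start_page])
    while queue:  # breadth-first walk, the way a bot follows links
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)

    orphans = sorted(set(link_graph) - reachable)
    overloaded = sorted(
        page for page, links in link_graph.items() if len(links) > max_links
    )
    return orphans, overloaded


# Hypothetical site: /landing links out, but nothing links to it.
graph = {
    "/": ["/about", "/products"],
    "/about": ["/"],
    "/products": [],
    "/landing": ["/"],
}
print(audit_internal_links(graph, "/"))  # (['/landing'], [])
```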

13. Redirecting problems

Redirects are needed to get users to more relevant pages (or, rather, to the page that the site owner thinks is most relevant). Here are the main points of attention that can lead to indexing problems:

- Temporary redirects instead of permanent redirects: Using 302 and 307 redirects causes the bot to come back to the page again and again, spending the crawl budget. If you find that the original page no longer needs to be indexed, use a 301 (permanent) redirect instead.

- Redirect loops: Two pages may redirect to one another, creating a cycle. The bot gets caught in the loop and spends the entire crawl budget. Check for and remove any redirect loops.

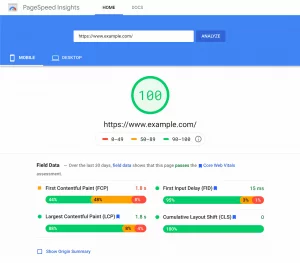
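Redirect loops like the one described above can be detected by following the chain of redirects and stopping as soon as a URL repeats. This is a minimal sketch that assumes your redirects are available as a dictionary from source URL to target URL; the function name and sample data are illustrative.

```python
def find_redirect_cycle(redirects, start_url):
    """Follow redirects from start_url; return the loop as a list, or None."""
    seen = []
    current = start_url
    while current in redirects:
        if current in seen:
            # We are back at a URL already visited: report the loop itself.
            return seen[seen.index(current):] + [current]
        seen.append(current)
        current = redirects[current]
    return None  # the chain ended at a page that does not redirect


# Hypothetical redirect table: /a and /b point at each other.
redirects = {"/a": "/b", "/b": "/a", "/old": "/new"}
print(find_redirect_cycle(redirects, "/a"))    # ['/a', '/b', '/a']
print(find_redirect_cycle(redirects, "/old"))  # None
```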

14. Low loading speed

The faster your pages load, the faster the bot gets through them. Every second is important. The position of the site in search results is also directly related to its loading speed.

Use Google Pagespeed Insights to find out if your site is fast enough. If not, it’s time to find out the reason for this slowness.

- Server-related factors: Your site may be slow for a very simple reason: the current bandwidth is no longer sufficient. You can check your bandwidth in the description of your hosting plan.

- Front-end factors: One of the most frequent factors is non-optimized code. If it contains bulky scripts and plug-ins, your site is at risk. Do not forget to optimize images, videos and other content, so they do not add to the page’s slowness.

15. Duplicate pages (a result of poor site architecture)

Duplicate content is one of the most frequent SEO problems, found on 50% of websites (according to SEMrush’s survey “11 Most Common SEO Problems”). It is also one of the main reasons you deplete your crawl budget. Google allocates a limited amount of time to each site, and it would be a waste to spend it indexing the same content.

Another difficulty – bots do not know which copy to prioritize, so they end up giving priority to the wrong pages.

To fix the damage you need to identify duplicate pages and prevent the bot from tracking them in one of the following ways:

- Delete duplicate pages

- Configure required parameters in the robots.txt file

- Set up required parameters in meta tags

- Set up a 301 redirect

- Use rel = canonical
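Before choosing one of the fixes above, you first need to know which pages are duplicates. A simple way to find exact copies is to compare a hash of each page's content. The sketch below assumes the page bodies are already downloaded into a dictionary; the function name and sample data are hypothetical, and the approach only catches pages that are identical after whitespace and case are normalized, not near-duplicates.

```python
import hashlib
from collections import defaultdict


def find_duplicates(pages):
    """Group URLs whose content is identical after simple normalization.

    pages maps each URL to the text content of that page.
    """
    groups = defaultdict(list)
    for url, body in pages.items():
        normalized = " ".join(body.split()).lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical pages: the same product reachable under two URLs.
pages = {
    "/shoes": "Red running shoes, size 42.",
    "/shoes?ref=home": "Red  running shoes,\nsize 42.",
    "/about": "About our shop.",
}
print(find_duplicates(pages))  # [['/shoes', '/shoes?ref=home']]
```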

16. Using JS and CSS

In 2015, Google officially stated that as long as you do not block Googlebot from crawling your JavaScript and CSS files, it can usually render and understand your pages. This does not apply to other search engines (Yahoo, Bing, etc.), and the word “usually” also indicates that in some cases correct indexing is not guaranteed even for Google.

Outdated Technologies

17. Flash Content

Using Flash is usually discouraged because it creates a bad experience for the user (Flash files do not display correctly on some mobile devices). But it can also hurt your SEO. Text content or a link within a Flash element has little chance of being indexed by search bots.

Our suggestion is not to use these elements.

18. HTML Frames

If your site has frames, we have good and bad news for you.

The good news is that the site with frames is a mature site. And the bad news is that HTML frames are quite outdated and are not well indexed by search bots. The suggestion is to replace the frames with a more modern solution.

Conclusion: Focus on the action!

The reason for your low ranking on Google is not always poorly chosen keywords or the content itself. Even a perfectly optimized page can fall off Google’s radar or fail to gain the highest rankings if bots cannot reach its content because of crawlability issues.

To understand what is blocking or disorienting the bot, you will need to review your entire domain. It’s too hard to do this manually. That’s why we recommend entrusting routine tasks to the appropriate tools. Major site audit platforms help identify, categorize, and prioritize problems, allowing you to start acting as soon as you receive the report. One more thing: many tools let you save data from previous audits, which helps you compare your site’s performance to previous time periods.

Know some more factors that influence the crawlability of a website? Do you use any tool to optimize and solve problems in a faster way? Share with us in the comments!
